The Cons

March 3, 2023

“Write a literary analysis of 1984.” When you type this prompt into ChatGPT, the new and already infamous chatbot released by OpenAI, it immediately crafts an essay on the topic. At first glance, the analysis might appear authentic, but you will quickly notice its repetitive reasoning and monotonous style. Yes, the writing follows the basic rules of English, but no, this isn’t the robot that will finish your homework.

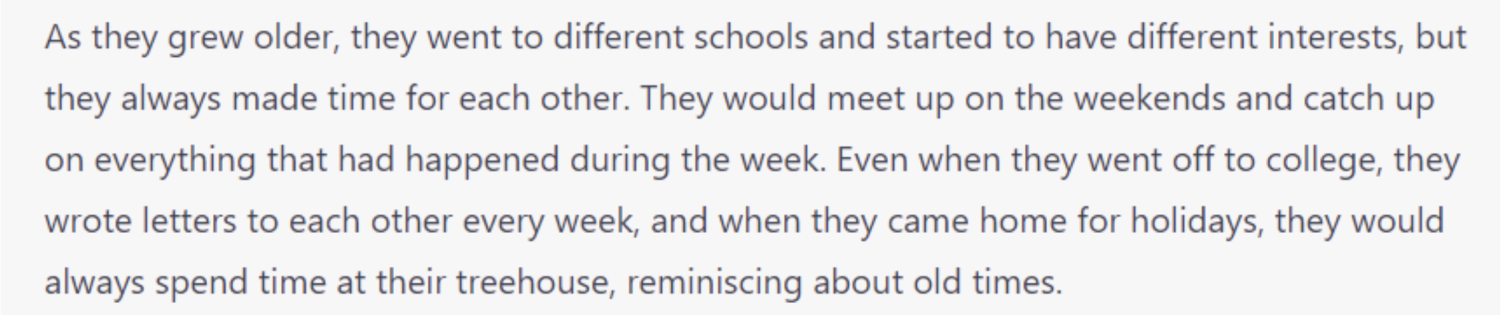

When given creative prompts, ChatGPT typically writes a short story that falls short of the requested word count and quickly summarizes the basic plot with minimal literary devices. Unless you explicitly request literary craft, such as metaphors, the bot won’t use any. This unnatural style is made worse by superficial dialogue and by “telling” the reader what the characters are doing instead of “showing” it. This lack of detail alone would fail the requirements of most high school English classes, and it produces a prosaic piece of writing.

For instance, when I asked ChatGPT to write “a creative fiction about childhood friends,” the bot produced a few paragraphs that would fit better in a magazine interview about two celebrity best friends. By writing “They would meet up on the weekends and catch up on everything that had happened during the week,” the bot misses an opportunity to elaborate on a specific moment, entice the reader, or convey creative details, settling instead for a vague description that neglects all five senses. The lack of sophisticated vocabulary and tone also results in an impassive commentary that is neither fiction nor journalism. It’s a lukewarm, incompetent, and disappointing piece.

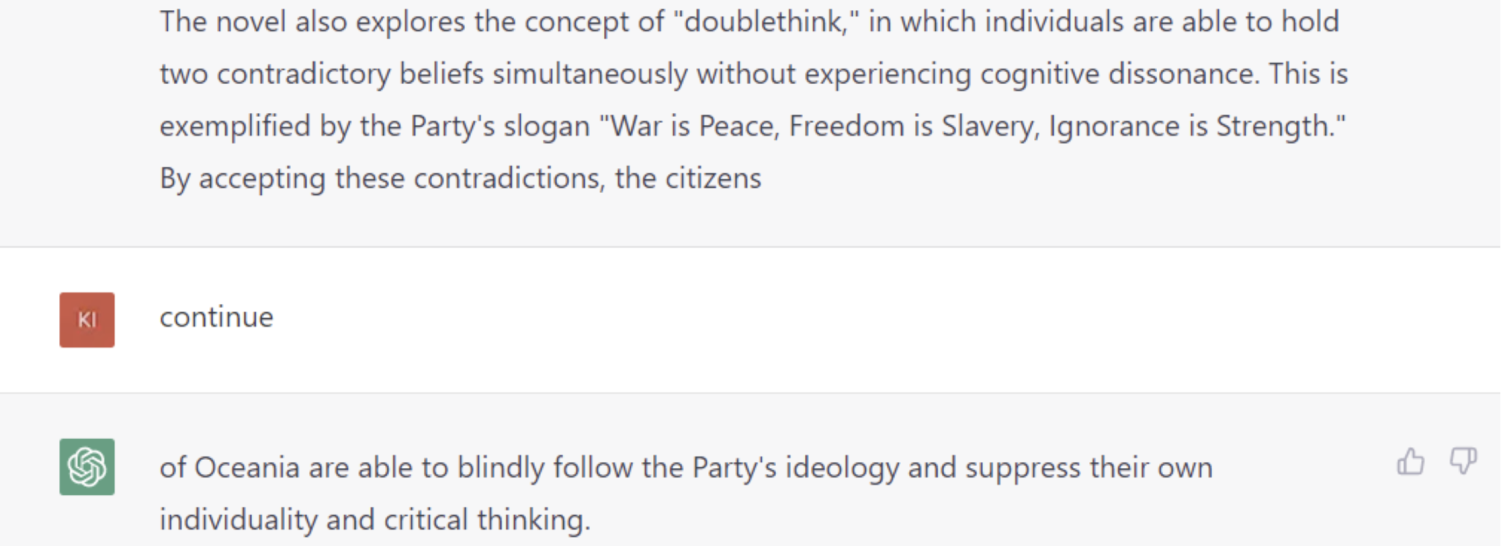

Some might argue that ChatGPT’s creators designed it for persuasive essay prompts rather than creative work, but even the 1984 essay lacks crucial elements of analysis. It is undoubtedly better than the creative fiction, but it is still mediocre. The bot offers no evidence or examples showing how the citizens of Oceania suppress their thoughts, and no reasoning as to why the people blindly accept these conditions. Although it correctly identifies the Party’s slogan, ChatGPT fails to explain how the slogan is used or how it reflects the government’s relationship with the people. The bot’s vague analysis only raises another question: how does any of this relate to censorship, real life, or totalitarianism?

Even if technological innovation eventually improves AI’s creative skills, that progress raises a new dilemma that calls into question the very motivation to attend school: why learn to think and write if an AI could easily replace students? Unfortunately, students already feel this contradiction, because an AI can significantly improve its writing with far less time and effort than they spend.

New, unimaginative technology like ChatGPT rides trends to attract fleeting users, displaying the “doublethink” irony that results from its artificial nature. After a while, students and others will find the bot inept, move on, and write their own work. Regardless, if students use ChatGPT now to complete their assignments, it will inevitably reduce the quality of their work by encouraging them to rely on the internet and others’ opinions, and it will make a mockery of the time and effort students dedicate to their writing.

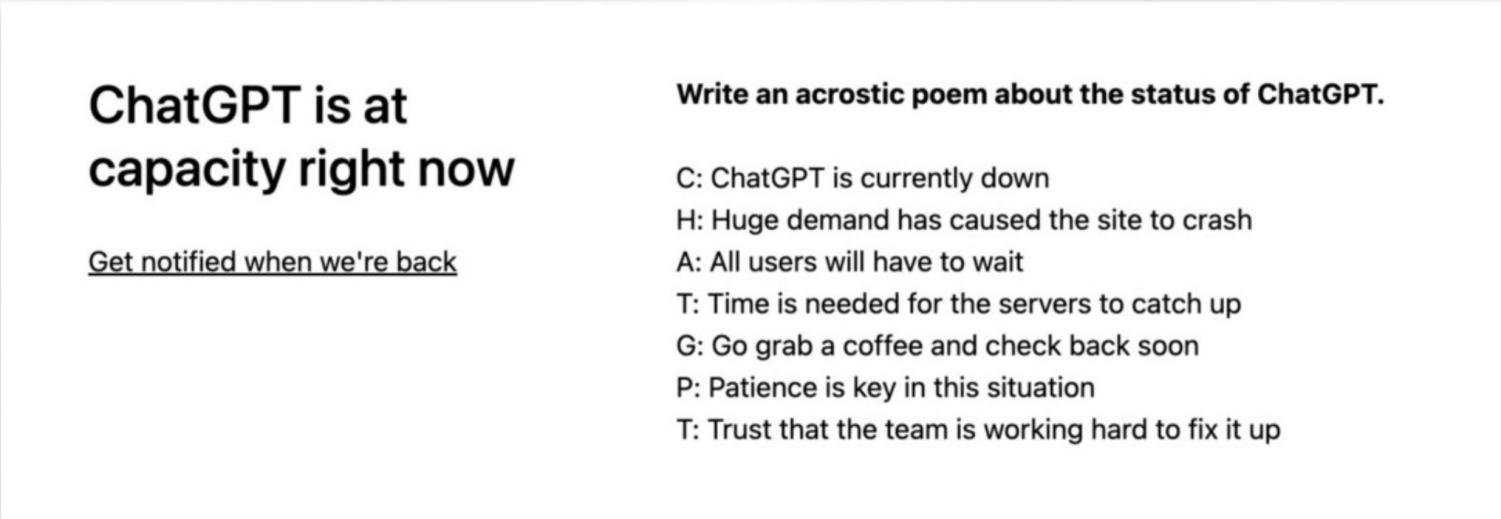

As ChatGPT continues to crash under temporary spikes in demand, students and schools are in luck, at least until the bot becomes reliably available to the public.